Amazon Bedrock Flows: Now Generally Available with Enhanced Safety and Traceability

AWS is excited to announce the general availability of Amazon Bedrock Flows (previously known as Prompt Flows), a feature designed to streamline the creation, deployment, and scaling of generative AI applications on AWS. With an emphasis on enhanced safety and traceability, it serves businesses seeking efficient and secure ways to use generative AI capabilities in production.

Bedrock Flows helps developers and businesses put generative AI to work, making it easier to build advanced, efficient AI-powered solutions for their customers.

https://aws.amazon.com/bedrock/flows/

Simplifying Generative AI Application Development

Amazon Bedrock Flows is a significant step forward in simplifying how developers and organizations implement generative AI models. By abstracting the complexity of integrating foundation models, Bedrock Flows provides pre-configured workflows that reduce the need for deep AI expertise, letting teams focus on innovation.

Enhanced Safety and Compliance

A critical component of generative AI is ensuring ethical and secure usage. Bedrock Flows incorporates advanced safety mechanisms to mitigate risks such as data leakage and unauthorized access. Traceability features enable organizations to track and audit the application of AI models, ensuring adherence to compliance requirements and ethical guidelines.

Core Features of Amazon Bedrock Flows

Pre-Built Workflows: Accelerate time-to-market with ready-to-use configurations tailored for various generative AI use cases.

Model Customization: Integrate proprietary or third-party foundation models and customize them to meet specific business needs.

Scalability: Utilize the scalable architecture of AWS to handle fluctuating workloads with ease, ensuring performance stability.

Integrated Safety Controls: Use built-in tools to monitor and mitigate risks such as harmful content or misuse of AI capabilities.

Comprehensive Traceability: Enable detailed auditing of model behavior and interactions for improved transparency and governance.

Quickly build generative AI workflows visually.

Test and deploy faster with serverless infrastructure.

Key advantages include:

Streamlined creation of generative AI workflows using a user-friendly visual interface.

Easy incorporation of advanced foundation models (FMs), prompts, agents, knowledge bases, guardrails, and other AWS tools.

Customizable workflows tailored to align with specific business logic.

Minimization of time and effort required for testing and deploying AI workflows, thanks to SDK APIs and serverless infrastructure.

New Features in Amazon Bedrock Flows

Generative AI adoption requires robust safety measures and clear workflow visibility. Amazon Bedrock Flows introduces two new features to address these needs, empowering organizations to create secure and traceable AI applications:

1. Enhanced Safety

The addition of Amazon Bedrock Guardrails enables organizations to filter harmful content and exclude unwanted topics. Guardrails are available in:

Prompt Nodes: Enforce strict controls over interactions with foundation models.

Knowledge Base Nodes: Regulate responses derived from your knowledge base.
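For teams that define flows through the SDK instead of the visual builder, the sketch below shows where a guardrail reference could go in each of these two node types. It assumes the flow-definition shape used by the `bedrock-agent` CreateFlow/UpdateFlow APIs, specifically a `guardrailConfiguration` object with `guardrailIdentifier` and `guardrailVersion`. The helper names, node names, and `$.data` input expressions are illustrative only, so verify the field names against the current API reference.

```python
"""Sketch: attaching a guardrail to Prompt and Knowledge Base flow nodes.

Field names assume the bedrock-agent flow-definition format; the helpers
(guardrail_config, prompt_node, knowledge_base_node) are hypothetical.
"""
from typing import Any, Dict, List


def guardrail_config(guardrail_id: str, version: str) -> Dict[str, str]:
    """Reference to a published guardrail version."""
    return {"guardrailIdentifier": guardrail_id, "guardrailVersion": version}


def prompt_node(name: str, model_id: str, template: str,
                inputs: List[Dict[str, str]],
                guardrail_id: str, version: str) -> Dict[str, Any]:
    """Prompt node with an inline prompt and a guardrail on its model call."""
    return {
        "type": "Prompt",
        "name": name,
        "configuration": {
            "prompt": {
                "sourceConfiguration": {
                    "inline": {
                        "modelId": model_id,
                        "templateType": "TEXT",
                        "templateConfiguration": {"text": {"text": template}},
                    }
                },
                "guardrailConfiguration": guardrail_config(guardrail_id, version),
            }
        },
        "inputs": inputs,
        "outputs": [{"name": "modelCompletion", "type": "String"}],
    }


def knowledge_base_node(name: str, kb_id: str, model_id: str,
                        guardrail_id: str, version: str) -> Dict[str, Any]:
    """Knowledge Base node that generates a response filtered by a guardrail."""
    return {
        "type": "KnowledgeBase",
        "name": name,
        "configuration": {
            "knowledgeBase": {
                "knowledgeBaseId": kb_id,
                # Assumption: with a modelId set, the node returns generated text.
                "modelId": model_id,
                "guardrailConfiguration": guardrail_config(guardrail_id, version),
            }
        },
        "inputs": [{"name": "retrievalQuery", "type": "String", "expression": "$.data"}],
        "outputs": [{"name": "outputText", "type": "String"}],
    }
```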

2. Enhanced Traceability

Organizations can now validate and debug workflows with comprehensive input/output tracking and inline validation, ensuring complete visibility into execution. Key enhancements include:

Detailed tracing for input and output nodes.

Execution path insights, including input, output, errors, and execution time for each node.

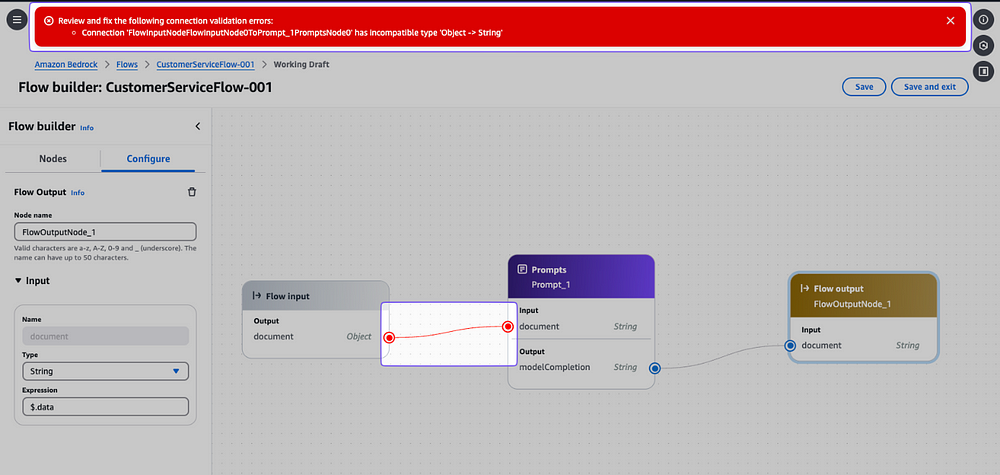

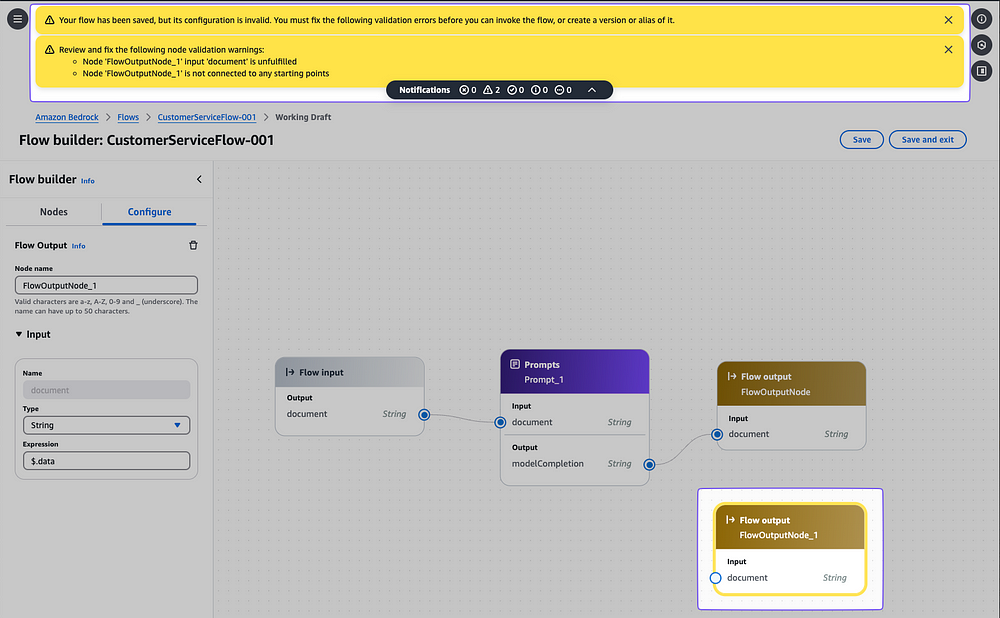

Inline validation status directly within the visual builder.

Practical Application: An E-commerce Company's Challenges

Consider an e-commerce company implementing a customer service chatbot with Amazon Bedrock Flows. Its challenges include:

Chatbot responses occasionally reveal sensitive customer data.

Inconsistent tone and quality in customer interactions.

Troubleshooting workflow issues is time-consuming.

Difficulty ensuring compliance with company policies and regulations.

Limited visibility into performance bottlenecks that degrade customer experience.

With Amazon Bedrock Flows, the e-commerce company can overcome these hurdles and build a more secure, efficient, and policy-compliant chatbot.

Real-World Applications

Organizations across industries are leveraging Amazon Bedrock Flows to transform operations and unlock value through generative AI. From automating content creation to enhancing customer support with intelligent chatbots, the use cases are diverse and impactful. For instance, marketing teams can generate tailored ad copy, while R&D departments accelerate product innovation with automated ideation tools.

Getting Started with Amazon Bedrock Flows

Adopting Amazon Bedrock Flows is straightforward, with guided documentation and support available to assist at every stage. AWS customers can now harness this technology to build robust generative AI applications, ensuring both efficiency and ethical deployment.

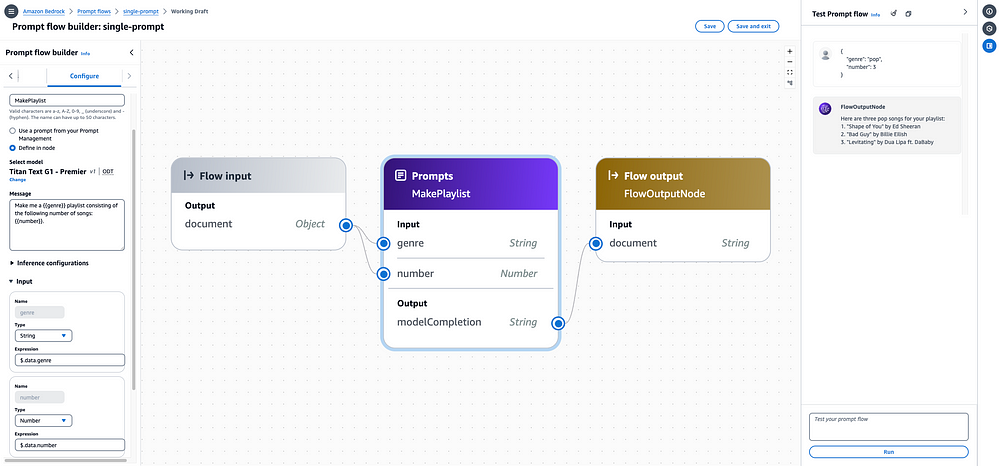

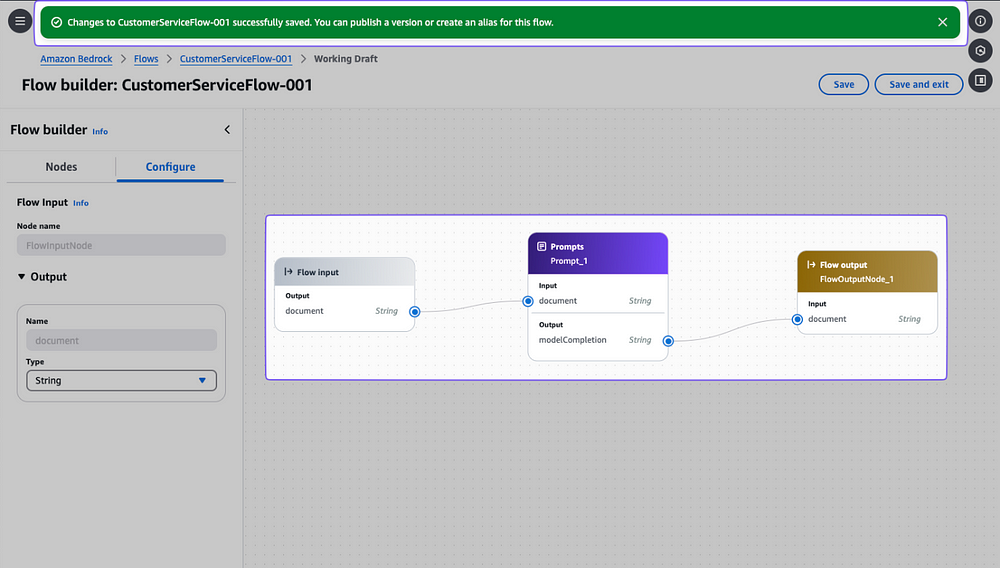

Create a flow with a single prompt

The following image shows a flow consisting of a single prompt, defined inline in the node, that builds a playlist of songs, given a genre and the number of songs to include in the playlist.

To build and test this flow in the console:

1. Follow the steps in the Console tab at Create a flow in Amazon Bedrock, then enter the Flow builder.

2. Set up the prompt node:

a. From the Flow builder left pane, select the Nodes tab.

b. Drag a Prompt node into your flow in the center pane.

c. Select the Configure tab in the Flow builder pane.

d. Enter MakePlaylist as the Node name.

e. Choose Define in node.

f. Set up the following configurations for the prompt:

i. Under Select model, select a model to run inference on the prompt.

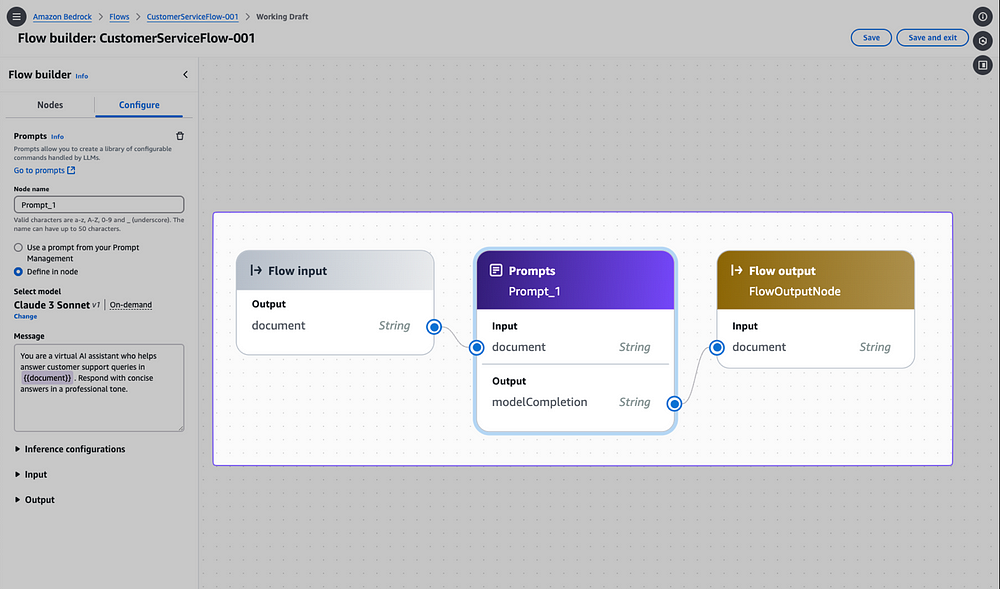

ii. In the Message text box, enter "Make me a {{genre}} playlist consisting of the following number of songs: {{number}}." This creates two variables that appear as inputs into the node.

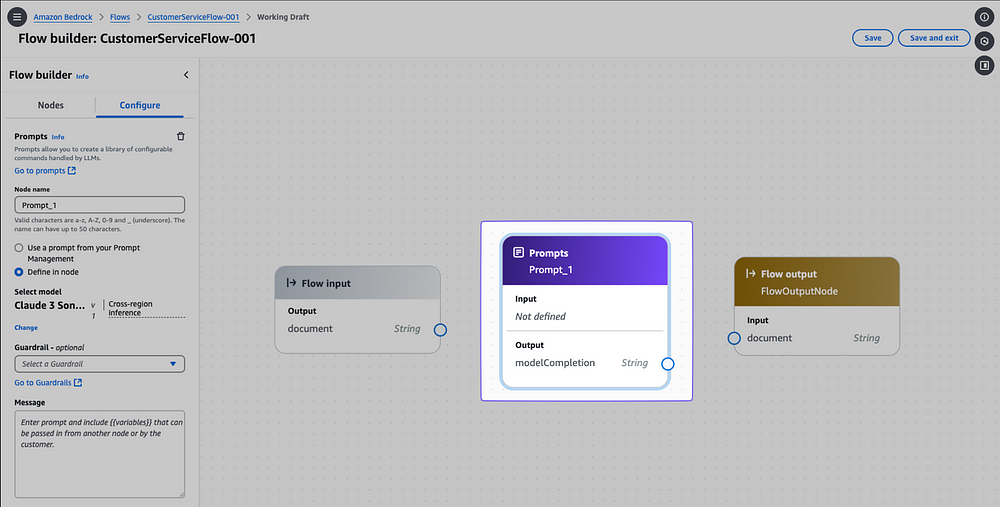

g. Expand the Inputs section. The names for the inputs are prefilled by the variables in the prompt message. Configure the inputs as follows:

genre: Type String, Expression $.data.genre

number: Type Number, Expression $.data.number

This configuration means that the prompt node expects a JSON object containing a field called genre, which is mapped to the genre input, and a field called number, which is mapped to the number input.

h. You can't modify the Output. It is named modelCompletion and contains the model's response, returned as a string.

3. Choose the Flow input node and select the Configure tab.

Select Object as the Type. This means the flow invocation expects to receive a JSON object.

4. Connect your nodes to complete the flow:

a. Drag a connection from the output of the Flow input node to the genre input in the MakePlaylist prompt node.

b. Drag a connection from the output of the Flow input node to the number input in the MakePlaylist prompt node.

c. Drag a connection from the modelCompletion output in the MakePlaylist prompt node to the document input in the Flow output node.

5. Choose Save to save the flow.

6. Test the flow by entering the following JSON object in the Test flow pane on the right:

{ "genre": "pop", "number": 3 }

Choose Run. The flow should return a model response.
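The same single-prompt flow can also be defined programmatically. The sketch below restates the console steps above as a flow definition for the `bedrock-agent` `create_flow` and `prepare_flow` operations. It assumes `agent_client` is a `boto3.client("bedrock-agent")` and that you supply your own IAM execution role ARN and model ID. The flow name, helper functions, and connection names are illustrative.

```python
"""Sketch: the MakePlaylist flow defined through the bedrock-agent API."""
from typing import Any, Dict

PROMPT_TEXT = ("Make me a {{genre}} playlist consisting of the following "
               "number of songs: {{number}}.")


def data_connection(source: str, source_output: str,
                    target: str, target_input: str) -> Dict[str, Any]:
    """Connect one node output to one node input."""
    return {
        "name": f"{source}_{source_output}_to_{target}_{target_input}",
        "source": source,
        "target": target,
        "type": "Data",
        "configuration": {"data": {"sourceOutput": source_output,
                                   "targetInput": target_input}},
    }


def playlist_flow_definition(model_id: str) -> Dict[str, Any]:
    """Flow input node -> MakePlaylist prompt node -> Flow output node."""
    nodes = [
        # The flow input is a JSON object.
        {"type": "Input", "name": "FlowInputNode",
         "outputs": [{"name": "document", "type": "Object"}]},
        # Prompt defined in the node; its two inputs are pulled from the object.
        {"type": "Prompt", "name": "MakePlaylist",
         "configuration": {"prompt": {"sourceConfiguration": {"inline": {
             "modelId": model_id,
             "templateType": "TEXT",
             "templateConfiguration": {"text": {"text": PROMPT_TEXT}},
         }}}},
         "inputs": [
             {"name": "genre", "type": "String", "expression": "$.data.genre"},
             {"name": "number", "type": "Number", "expression": "$.data.number"},
         ],
         "outputs": [{"name": "modelCompletion", "type": "String"}]},
        # The model's string response becomes the flow output.
        {"type": "Output", "name": "FlowOutputNode",
         "inputs": [{"name": "document", "type": "String", "expression": "$.data"}]},
    ]
    connections = [
        data_connection("FlowInputNode", "document", "MakePlaylist", "genre"),
        data_connection("FlowInputNode", "document", "MakePlaylist", "number"),
        data_connection("MakePlaylist", "modelCompletion", "FlowOutputNode", "document"),
    ]
    return {"nodes": nodes, "connections": connections}


def create_playlist_flow(agent_client: Any, role_arn: str, model_id: str) -> str:
    """Create and prepare the flow, returning its ID."""
    created = agent_client.create_flow(
        name="MakePlaylistFlow",
        executionRoleArn=role_arn,
        definition=playlist_flow_definition(model_id),
    )
    agent_client.prepare_flow(flowIdentifier=created["id"])
    return created["id"]
```

Keeping the definition in a pure function lets you inspect or validate it before any API call is made.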

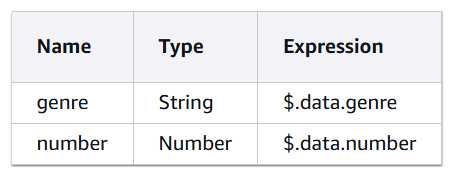

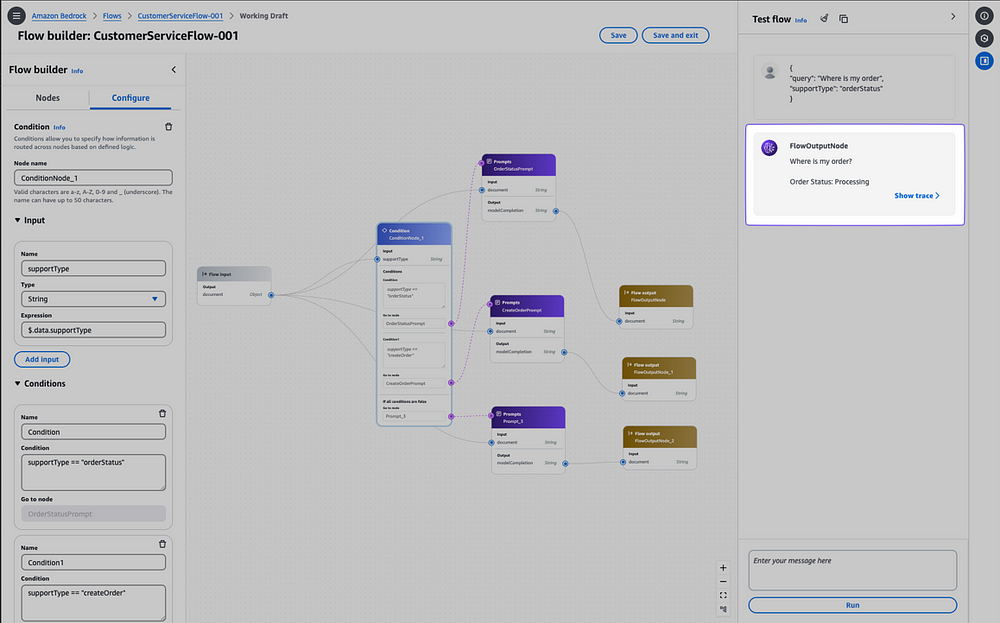
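To run the saved flow from code instead of the Test flow pane, you can use the `bedrock-agent-runtime` `invoke_flow` operation, which streams back output and completion events. This sketch assumes `runtime_client` is a `boto3.client("bedrock-agent-runtime")` and that you have already published a version and alias of the flow (for example, with `create_flow_version` and `create_flow_alias`). The flow and alias IDs are placeholders that you supply.

```python
"""Sketch: invoking the MakePlaylist flow and reading its response stream."""
from typing import Any, Dict, Iterable, List


def build_flow_inputs(document: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Send `document` through the flow input node's `document` output."""
    return [{
        "nodeName": "FlowInputNode",
        "nodeOutputName": "document",
        "content": {"document": document},
    }]


def read_flow_stream(events: Iterable[Dict[str, Any]]) -> Dict[str, Any]:
    """Collect output documents and the completion reason from streamed events."""
    outputs: List[Any] = []
    completion = None
    for event in events:
        if "flowOutputEvent" in event:
            outputs.append(event["flowOutputEvent"]["content"]["document"])
        elif "flowCompletionEvent" in event:
            completion = event["flowCompletionEvent"]["completionReason"]
    return {"outputs": outputs, "completionReason": completion}


def run_playlist(runtime_client: Any, flow_id: str, alias_id: str,
                 genre: str, number: int) -> Dict[str, Any]:
    """Invoke the flow with the same JSON object used in the console test."""
    response = runtime_client.invoke_flow(
        flowIdentifier=flow_id,
        flowAliasIdentifier=alias_id,
        inputs=build_flow_inputs({"genre": genre, "number": number}),
    )
    return read_flow_stream(response["responseStream"])
```

For example, `run_playlist(boto3.client("bedrock-agent-runtime"), "<flow-id>", "<alias-id>", "pop", 3)` corresponds to the console test above.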

Create a flow with a condition node

This example builds a flow with one condition node that returns one of three possible values, depending on which condition is fulfilled.
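As a hedged illustration, the sketch below builds a hypothetical definition in which a Condition node named `PriceCheck` evaluates a `price` field and routes execution to one of three Output nodes: `expensive`, `cheap`, or the `default` branch. The node names, condition expressions, and data wiring are all assumptions, and the assumed `Conditional` connection shape follows the `bedrock-agent` flow-definition format. Validate the result in the Flow builder before relying on it.

```python
"""Hypothetical flow: one Condition node routing to one of three outputs."""
from typing import Any, Dict, List

# Condition name -> Output node it routes to (illustrative names).
OUTPUTS = {"expensive": "ExpensiveOutput", "cheap": "CheapOutput", "default": "DefaultOutput"}


def data_connection(source: str, source_output: str,
                    target: str, target_input: str) -> Dict[str, Any]:
    """Data connection: passes a node output into a node input."""
    return {
        "name": f"{source}_to_{target}",
        "source": source,
        "target": target,
        "type": "Data",
        "configuration": {"data": {"sourceOutput": source_output,
                                   "targetInput": target_input}},
    }


def conditional_connection(source: str, condition: str, target: str) -> Dict[str, Any]:
    """Conditional connection: followed only when `condition` is the one met."""
    return {
        "name": f"{source}_{condition}",
        "source": source,
        "target": target,
        "type": "Conditional",
        "configuration": {"conditional": {"condition": condition}},
    }


def condition_flow_definition() -> Dict[str, Any]:
    nodes: List[Dict[str, Any]] = [
        {"type": "Input", "name": "FlowInputNode",
         "outputs": [{"name": "document", "type": "Object"}]},
        {"type": "Condition", "name": "PriceCheck",
         "inputs": [{"name": "price", "type": "Number", "expression": "$.data.price"}],
         "configuration": {"condition": {"conditions": [
             {"name": "expensive", "expression": "price > 1000"},
             {"name": "cheap", "expression": "price < 10"},
             {"name": "default"},  # taken when no other condition is met
         ]}}},
    ]
    connections = [data_connection("FlowInputNode", "document", "PriceCheck", "price")]
    for condition, output_name in OUTPUTS.items():
        nodes.append({"type": "Output", "name": output_name,
                      "inputs": [{"name": "document", "type": "Object",
                                  "expression": "$.data"}]})
        # Assumption: each output receives the original input as data, while
        # the conditional connection gates which output actually runs.
        connections.append(data_connection("FlowInputNode", "document", output_name, "document"))
        connections.append(conditional_connection("PriceCheck", condition, output_name))
    return {"nodes": nodes, "connections": connections}
```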

Prerequisites for Implementing New Capabilities in Amazon Bedrock Flows

Before utilizing the enhanced safety and traceability features in Amazon Bedrock Flows, ensure the following prerequisites are met:

An AWS account

In Amazon Bedrock:

Create and test your base prompts for customer service interactions in Prompt Management.

Set up your knowledge base with relevant customer service documentation, FAQs, and product information.

Configure any auxiliary AWS services needed for your customer service workflow (for example, Amazon DynamoDB for order history).

In Amazon Bedrock Guardrails:

* Create a guardrail configuration for customer service interactions (for example, CustomerServiceGuardrail) with:

- Content filters for inappropriate language and harmful content

- Personally identifiable information (PII) detection and masking rules for customer data

- Custom word filters for company-specific terms

- Contextual grounding checks to ensure accurate information

* Test and validate your guardrail configuration.

* Publish a working version of your guardrail.

Required IAM permissions:

Access to Amazon Bedrock Flows

Permissions to use configured guardrails

Appropriate access to any integrated AWS services
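The guardrail prerequisites above can also be scripted. The sketch below assumes `bedrock_client` is a `boto3.client("bedrock")` and uses the `create_guardrail` and `create_guardrail_version` operations. The blocked-message text, filter strengths, grounding thresholds, and helper names are illustrative choices, not recommended values. Creation and publishing are kept separate so the draft can be tested (for example, in the console) before a version is published.

```python
"""Sketch: creating and publishing a customer service guardrail."""
from typing import Any, Dict, List


def customer_service_guardrail_request(name: str, company_terms: List[str]) -> Dict[str, Any]:
    """CreateGuardrail parameters covering the prerequisites listed above."""
    return {
        "name": name,
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't share that response.",
        # Content filters for harmful content (illustrative strengths).
        "contentPolicyConfig": {"filtersConfig": [
            {"type": t, "inputStrength": "HIGH", "outputStrength": "HIGH"}
            for t in ("HATE", "INSULTS", "SEXUAL", "VIOLENCE", "MISCONDUCT")
        ]},
        # PII masking: matched names and emails are replaced with placeholders.
        "sensitiveInformationPolicyConfig": {"piiEntitiesConfig": [
            {"type": "NAME", "action": "ANONYMIZE"},
            {"type": "EMAIL", "action": "ANONYMIZE"},
        ]},
        # Company-specific terms plus the managed profanity list.
        "wordPolicyConfig": {
            "wordsConfig": [{"text": term} for term in company_terms],
            "managedWordListsConfig": [{"type": "PROFANITY"}],
        },
        # Contextual grounding checks (illustrative thresholds).
        "contextualGroundingPolicyConfig": {"filtersConfig": [
            {"type": "GROUNDING", "threshold": 0.75},
            {"type": "RELEVANCE", "threshold": 0.75},
        ]},
    }


def create_draft_guardrail(bedrock_client: Any, name: str, company_terms: List[str]) -> str:
    """Create the guardrail (as a working draft) and return its ID."""
    created = bedrock_client.create_guardrail(
        **customer_service_guardrail_request(name, company_terms))
    return created["guardrailId"]


def publish_guardrail(bedrock_client: Any, guardrail_id: str) -> str:
    """Publish the tested draft as a numbered version for use in flow nodes."""
    published = bedrock_client.create_guardrail_version(
        guardrailIdentifier=guardrail_id,
        description="Tested customer service configuration",
    )
    return published["version"]
```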

Next Steps

Once these prerequisites are in place, you can seamlessly implement the new capabilities to enhance your customer service workflows with Amazon Bedrock Flows.
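As a starting point for the IAM prerequisites, the sketch below builds two hypothetical policy documents. The first is for a caller that invokes a flow alias. The second is for the flow's execution role, which calls a model, retrieves from a knowledge base, and applies the guardrail. The action names and ARN formats are assumptions to confirm against the Amazon Bedrock service authorization reference, and the exact actions your nodes need may differ. Scope the resources down before use.

```python
"""Hypothetical IAM policy documents for a guarded customer service flow."""
import json
from typing import Any, Dict


def caller_policy(region: str, account: str, flow_id: str) -> Dict[str, Any]:
    """Lets an application invoke any alias of one flow."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["bedrock:InvokeFlow"],
            "Resource": f"arn:aws:bedrock:{region}:{account}:flow/{flow_id}/alias/*",
        }],
    }


def execution_role_policy(region: str, account: str, model_id: str,
                          kb_id: str, guardrail_id: str) -> Dict[str, Any]:
    """Assumed minimal permissions the flow needs while it runs."""
    prefix = f"arn:aws:bedrock:{region}:{account}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["bedrock:InvokeModel"],
             "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}"},
            {"Effect": "Allow", "Action": ["bedrock:Retrieve"],
             "Resource": f"{prefix}:knowledge-base/{kb_id}"},
            {"Effect": "Allow", "Action": ["bedrock:ApplyGuardrail"],
             "Resource": f"{prefix}:guardrail/{guardrail_id}"},
        ],
    }


if __name__ == "__main__":
    # Print a policy document as JSON, ready to paste into the IAM console.
    print(json.dumps(caller_policy("us-east-1", "123456789012", "FLOWID"), indent=2))
```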

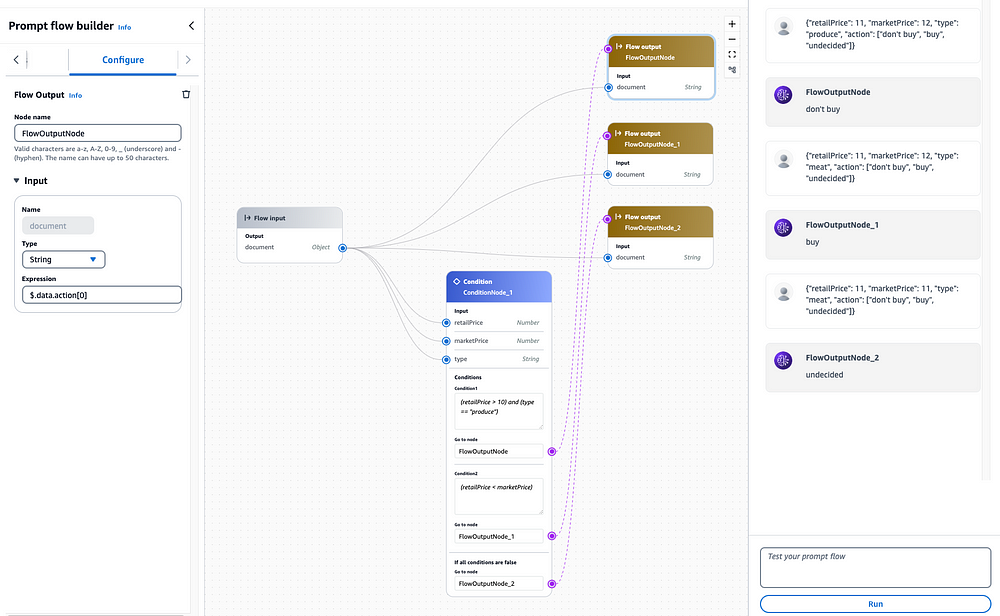

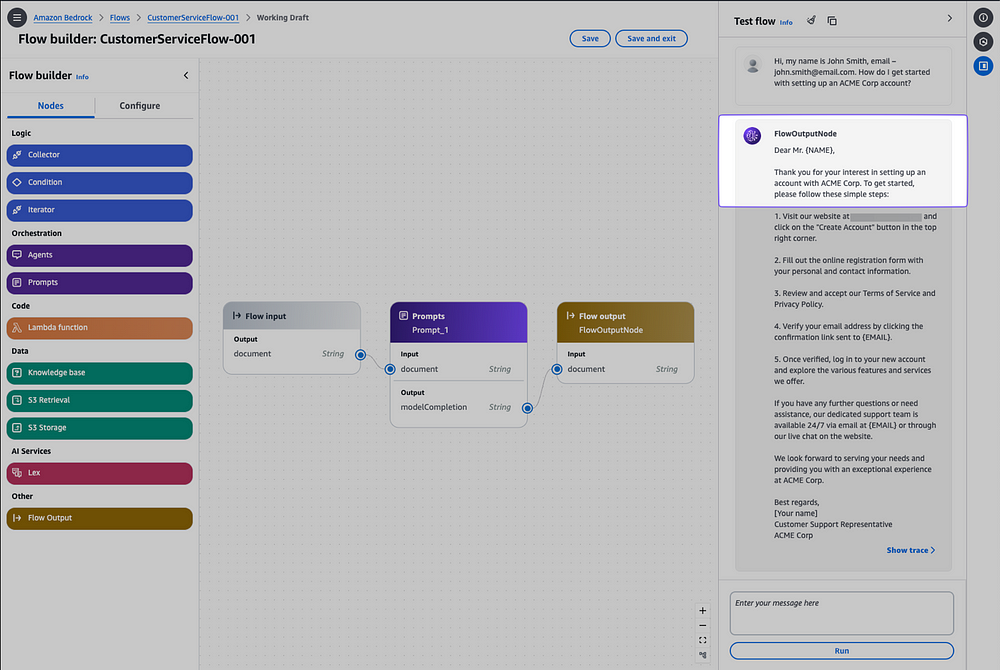

Enabling enhanced safety in Flows

For the customer service chatbot, implementing guardrails helps ensure safe, compliant, and consistent customer interactions.

Guardrails can be enabled in both Prompt nodes and Knowledge Base nodes:

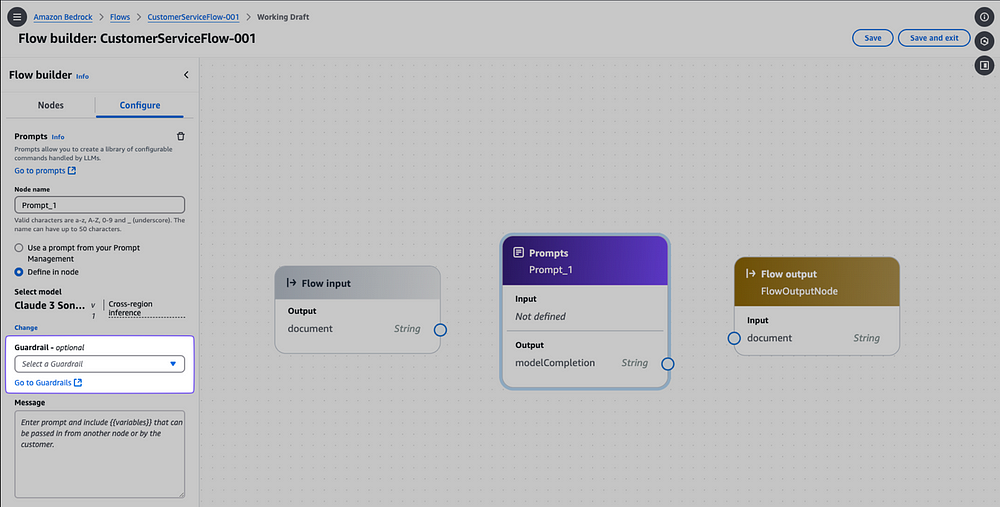

1. In the Amazon Bedrock console, open the Prompt node or Knowledge Base node in your customer service flow where you want to add guardrails. Create a new flow if required.

2. In the node configuration panel, locate the Guardrail section.

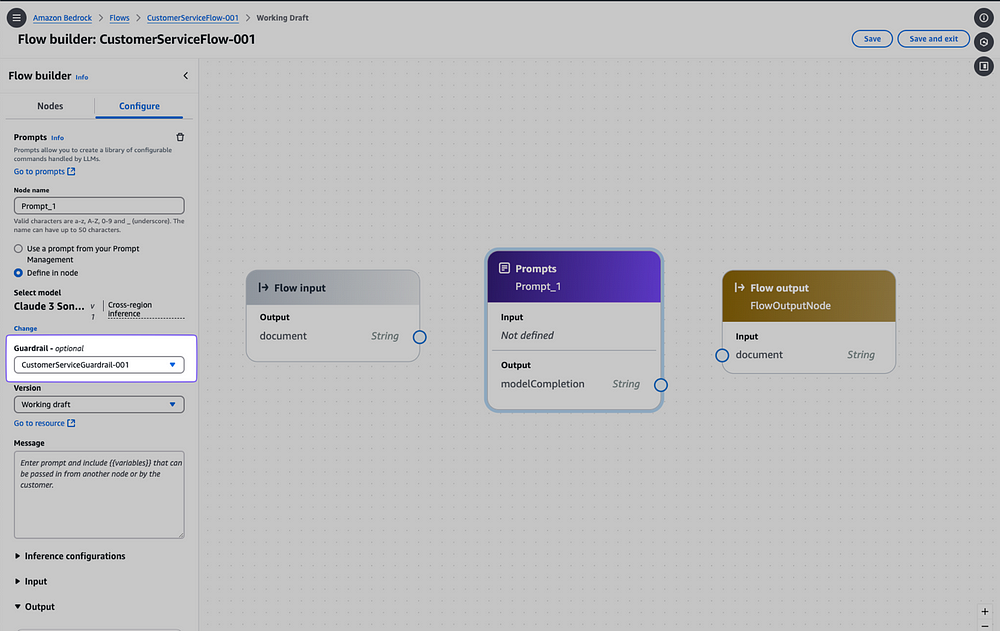

3. Select an existing guardrail from the dropdown menu.

4. In this example, CustomerServiceGuardrail-chatbot is configured to:

Mask customer PII data (name and email)

Block inappropriate language and harmful content

Align responses with company policy

Maintain a professional tone in responses

5. Choose the appropriate version of your guardrail.

6. Enter your prompt message for customer service.

7. Connect your Prompt node to the flow’s input and output nodes.

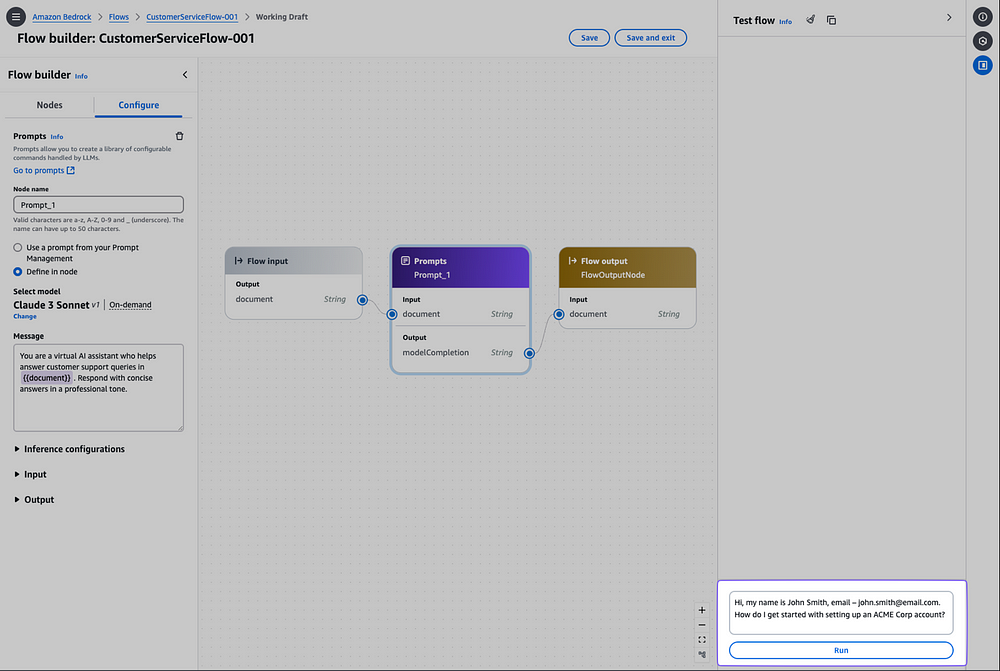

8. Test your flow with the guardrail in place.

9. In the Test flow pane on the right of the interface, you can see how the guardrail handles sensitive information in the model response:

Original response: “Dear Mr. John Smith…”

Guardrail response: “Dear Mr. {NAME}…”
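Outside a flow, the same guardrail can be checked directly against a piece of text, which is useful for reproducing the masking shown above. This sketch assumes `runtime_client` is a `boto3.client("bedrock-runtime")` and uses its `apply_guardrail` operation with `source="OUTPUT"`. The guardrail ID and version are placeholders, and the helper names are hypothetical.

```python
"""Sketch: checking a chatbot reply against a published guardrail version."""
from typing import Any, Dict


def guardrail_request(guardrail_id: str, version: str, text: str) -> Dict[str, Any]:
    """ApplyGuardrail parameters that treat `text` as model output."""
    return {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": version,
        "source": "OUTPUT",
        "content": [{"text": {"text": text}}],
    }


def guarded_text(response: Dict[str, Any], original: str) -> str:
    """Return the guardrail's masked or blocked text if it intervened, else the original."""
    if response.get("action") == "GUARDRAIL_INTERVENED" and response.get("outputs"):
        return "".join(item["text"] for item in response["outputs"])
    return original


def check_reply(runtime_client: Any, guardrail_id: str, version: str, reply: str) -> str:
    """Run the reply through the guardrail and return what the customer would see."""
    response = runtime_client.apply_guardrail(**guardrail_request(guardrail_id, version, reply))
    return guarded_text(response, reply)
```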

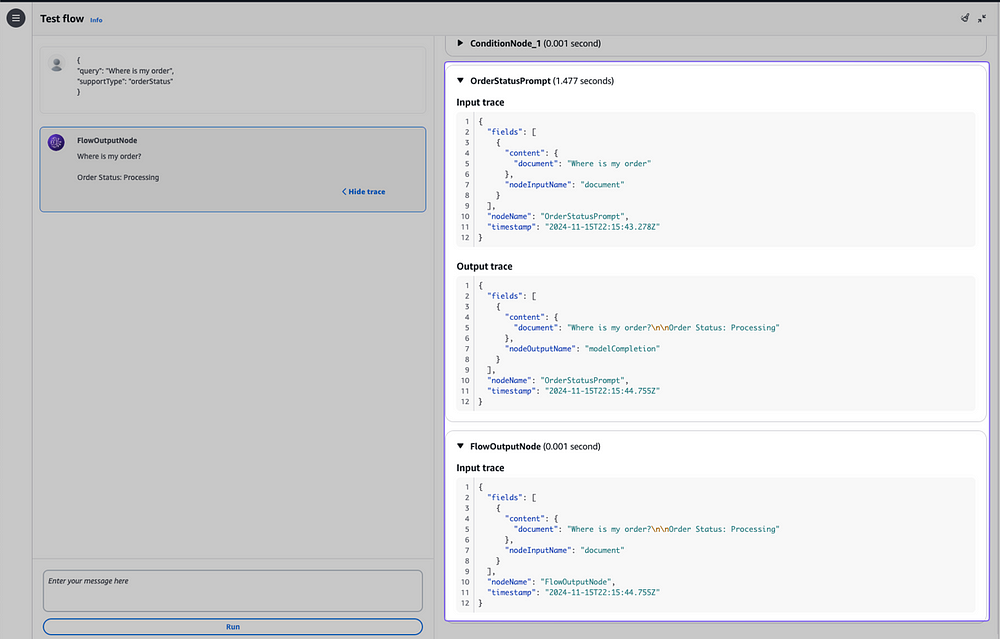

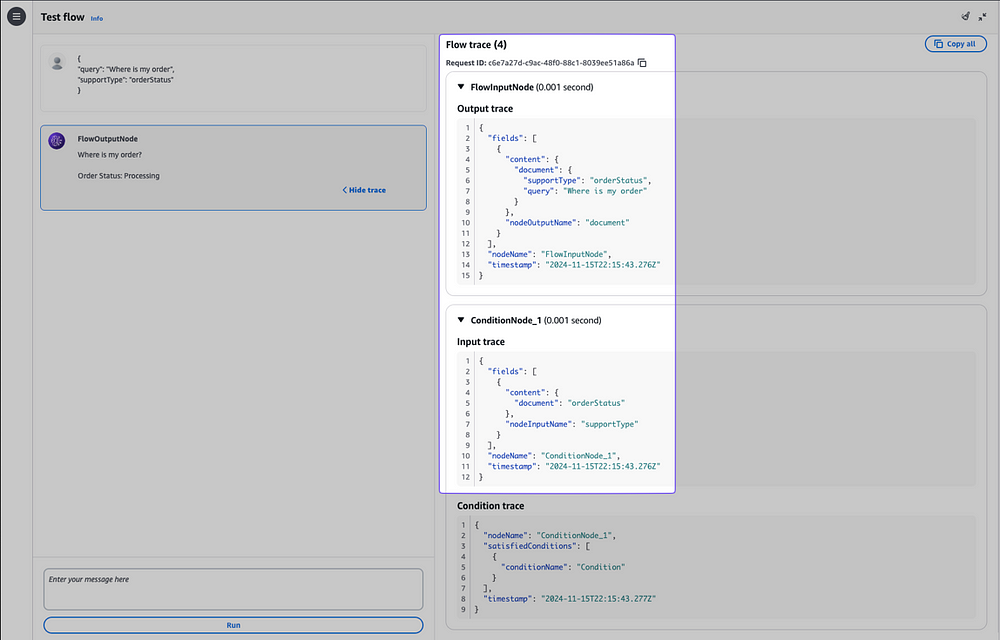

Enhanced traceability with Flows Trace View

The new Flows tracing capability provides detailed visibility into flow execution and improves debugging with the Trace view and inline validation.

Together, these capabilities help developers monitor, debug, and optimize their generative AI workflows more effectively.

Key benefits of enhanced traceability with Flows Trace View:

Complete visibility into the execution path through the Trace view

Detailed input/output tracing for each node

Errors, warnings, and execution timing for every node

Quick identification of bottlenecks and issues

Faster root-cause analysis for errors

Monitor response times for user interactions

Identify patterns in user queries that cause delays

Debug issues in the conversation flow

Optimize the user experience

To use the Trace view:

1. In the Amazon Bedrock console, open your flow and test it.

2. After running your flow, choose Show trace to analyze the execution.

3. Review the Flow Trace window, which shows:

Response times for each step

Where guardrails are applied

How customer inputs are processed

Performance bottlenecks

4. Analyze execution details, including:

Processing steps

Response generation & validation

Time taken by each step

Error details
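The Trace view also has an SDK counterpart. Passing `enableTrace=True` to `invoke_flow` adds `flowTraceEvent` entries to the response stream. The sketch below assumes each trace carries a `nodeInputTrace` or `nodeOutputTrace` with `nodeName` and `timestamp` fields. It estimates each node's execution time as the gap between its first input trace and its output trace. It also assumes `runtime_client` is a `boto3.client("bedrock-agent-runtime")`; the helper names are hypothetical.

```python
"""Sketch: per-node timing from Bedrock Flows trace events."""
from datetime import datetime
from typing import Any, Dict, Iterable, List, Optional


def traced_invoke(runtime_client: Any, flow_id: str, alias_id: str,
                  document: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Invoke a flow with tracing enabled and collect every streamed event."""
    response = runtime_client.invoke_flow(
        flowIdentifier=flow_id,
        flowAliasIdentifier=alias_id,
        enableTrace=True,
        inputs=[{"nodeName": "FlowInputNode", "nodeOutputName": "document",
                 "content": {"document": document}}],
    )
    return list(response["responseStream"])


def node_durations(events: Iterable[Dict[str, Any]]) -> Dict[str, float]:
    """Seconds between each node's first input trace and its output trace."""
    started: Dict[str, datetime] = {}
    durations: Dict[str, float] = {}
    for event in events:
        trace = event.get("flowTraceEvent", {}).get("trace", {})
        if "nodeInputTrace" in trace:
            t = trace["nodeInputTrace"]
            started.setdefault(t["nodeName"], t["timestamp"])
        elif "nodeOutputTrace" in trace:
            t = trace["nodeOutputTrace"]
            if t["nodeName"] in started:
                elapsed = t["timestamp"] - started[t["nodeName"]]
                durations[t["nodeName"]] = elapsed.total_seconds()
    return durations


def slowest_node(durations: Dict[str, float]) -> Optional[str]:
    """Name of the node that took longest, or None if nothing was timed."""
    return max(durations, key=durations.get) if durations else None
```

This sketch skips nodes that emit only one kind of trace (typically the flow input and output nodes), because there is no pair of timestamps to compare.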

Inline validation status

The Flows visual builder and SDK now include node validation capabilities:

Visual Builder:

- Green indicates a valid node configuration.

- Red indicates an invalid node configuration.

- Yellow indicates a node configuration with warnings.

These validation capabilities help developers and AI engineers quickly identify and resolve potential issues by giving real-time validation feedback.
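On the SDK side, a similar check can be run before you save a definition. The sketch below assumes the `bedrock-agent` client exposes `validate_flow_definition(definition=...)`, which returns a `validations` list whose items carry a `severity` (`"Error"` or `"Warning"`) and a `message`. Confirm this against the current boto3 reference. The color mapping mirrors the visual builder's convention but is aggregated over the whole definition rather than per node. The helper names are hypothetical.

```python
"""Sketch: summarizing flow-definition validation like the visual builder."""
from typing import Any, Dict, List


def validation_color(validations: List[Dict[str, Any]]) -> str:
    """Red if any error, yellow if only warnings, green if no findings."""
    severities = {v.get("severity") for v in validations}
    if "Error" in severities:
        return "red"
    if "Warning" in severities:
        return "yellow"
    return "green"


def check_definition(agent_client: Any, definition: Dict[str, Any]) -> str:
    """Validate a definition, print each finding, and return a summary color."""
    response = agent_client.validate_flow_definition(definition=definition)
    validations = response.get("validations", [])
    for finding in validations:
        print(f"[{finding.get('severity')}] {finding.get('message')}")
    return validation_color(validations)
```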

Workshops:

**No-code generative AI application development with Amazon Bedrock Flows**

https://catalog.us-east-1.prod.workshops.aws/workshops/ca98ae19-48a5-4f95-90e3-56bbfeaae0fc/en-US

**Simplifying the Prompts Lifecycle with Prompt Management and Prompt Flows for Amazon Bedrock**

https://catalog.us-east-1.prod.workshops.aws/workshops/c81935bc-0b43-4bd6-bd01-db45f847d6bd/en-US

Some of the resources here are drawn from the AWS documentation and blog.

AWS user guide for Guardrails integration and Traceability.